LLM Security, Vulnerabilities, and Models

Open Worldwide Application Security Project (OWASP) Top 10 for Large Language Model Applications

This OWASP project catalogs the potential security risks of deploying and managing Large Language Models (LLMs). It provides a list of the 10 most critical vulnerabilities commonly seen in LLM applications, highlighting their potential impact, ease of exploitation, and prevalence in real-world applications. Examples include prompt injection, data leakage, inadequate sandboxing, and unauthorized code execution. The goal is to raise awareness of these vulnerabilities, suggest remediation strategies, and ultimately improve the security posture of LLM applications.
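To make two of these risks concrete, here is a minimal Python sketch of one possible mitigation for prompt injection (screening untrusted input and keeping it apart from trusted instructions) and one for data leakage (redacting secret-looking strings from model output). It is an illustration under stated assumptions, not a remediation prescribed by OWASP: the pattern lists and the function names `looks_like_injection`, `build_messages`, and `redact_secrets` are hypothetical, and simple pattern matching like this is easy to bypass, so it should only ever be one layer among several.

```python
import re
from typing import Dict, List

# Hypothetical phrases that often appear in prompt-injection attempts.
# Assumption: a real deployment would need much broader detection than this.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]

# Hypothetical secret formats to scrub from model output (illustrative only).
SECRET_PATTERNS = [
    re.compile(r"sk-[A-Za-z0-9]{20,}"),    # API-key-like tokens
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN-like numbers
]


def looks_like_injection(user_text: str) -> bool:
    """Return True if the text matches any of the suspicious patterns."""
    return any(p.search(user_text) for p in SUSPICIOUS_PATTERNS)


def build_messages(system_prompt: str, user_text: str) -> List[Dict[str, str]]:
    """Keep trusted instructions and untrusted input in separate messages."""
    if looks_like_injection(user_text):
        raise ValueError("possible prompt injection detected")
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


def redact_secrets(model_output: str) -> str:
    """Replace secret-looking substrings before the output leaves the app."""
    for pattern in SECRET_PATTERNS:
        model_output = pattern.sub("[REDACTED]", model_output)
    return model_output
```

In use, an application would call `build_messages` before sending a request to the model and pass the model's reply through `redact_secrets` before showing it to the user.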

OpenAI has a Model Spec intended to outline precisely how its models should behave. Similar to Anthropic’s Constitutional AI, it’s a set of internal prompts and other measures that guide answers toward “ethical” and more helpful results.

For example, the rules in the Model Spec include:

- Follow the chain of command

- Comply with applicable laws

- Don’t provide information hazards

- Respect creators and their rights

- Protect people’s privacy

- Don’t respond with NSFW content

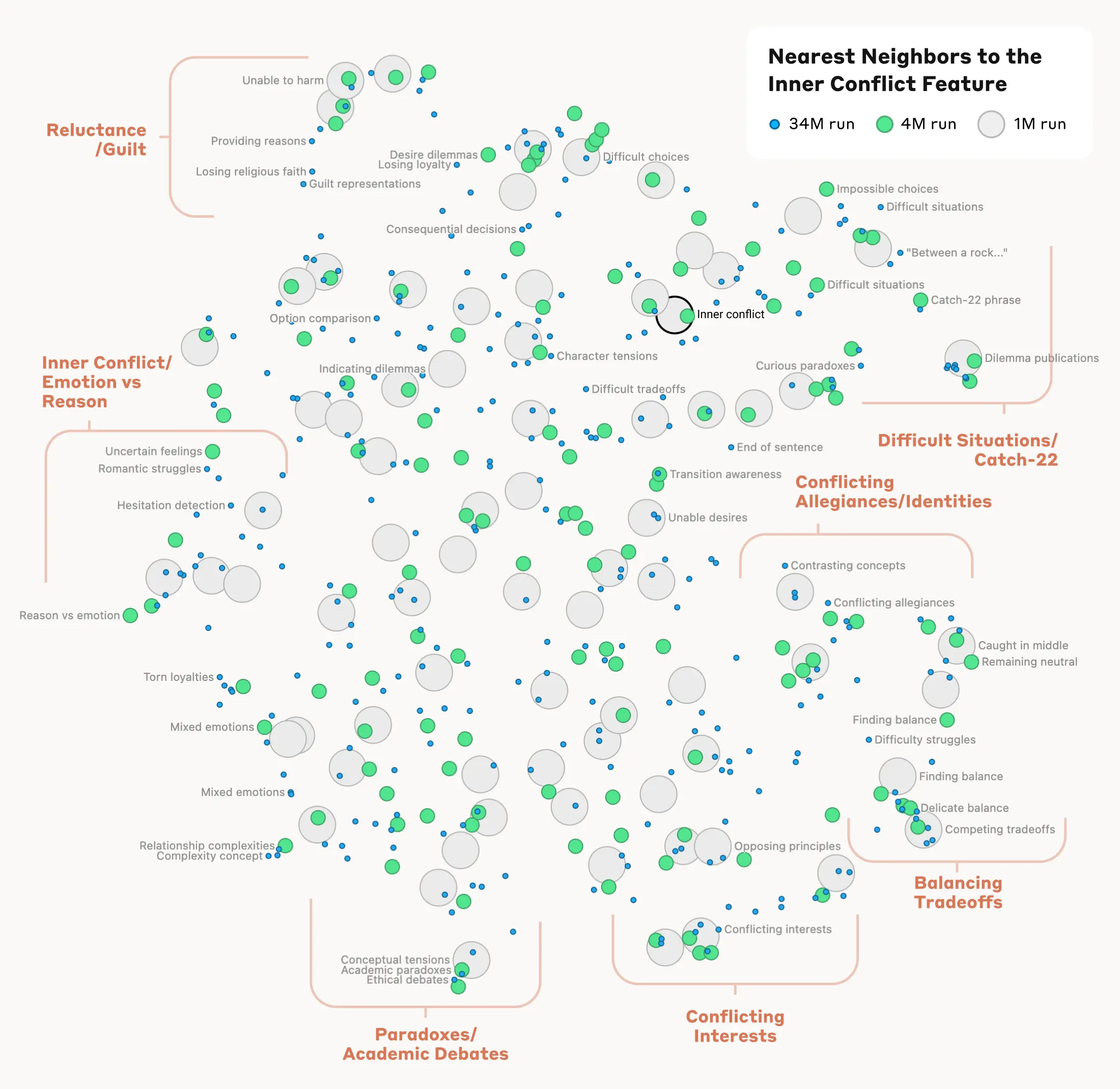

Anthropic goes further, attempting to map how its Claude models work internally and showing which “neurons” are activated in responses that mention concepts like the “Golden Gate Bridge” or that discuss gender bias.